EleutherAI Releases GPT-NeoX-20B, a 20-Billion-Parameter AI Language Model

By a mysterious writer

Description

GPT-NeoX-20B, a 20-billion-parameter natural language processing (NLP) model similar to GPT-3, has been open-sourced.

[N] EleutherAI announces a 20 billion parameter model, GPT-NeoX-20B, with weights being publicly released next week : r/MachineLearning

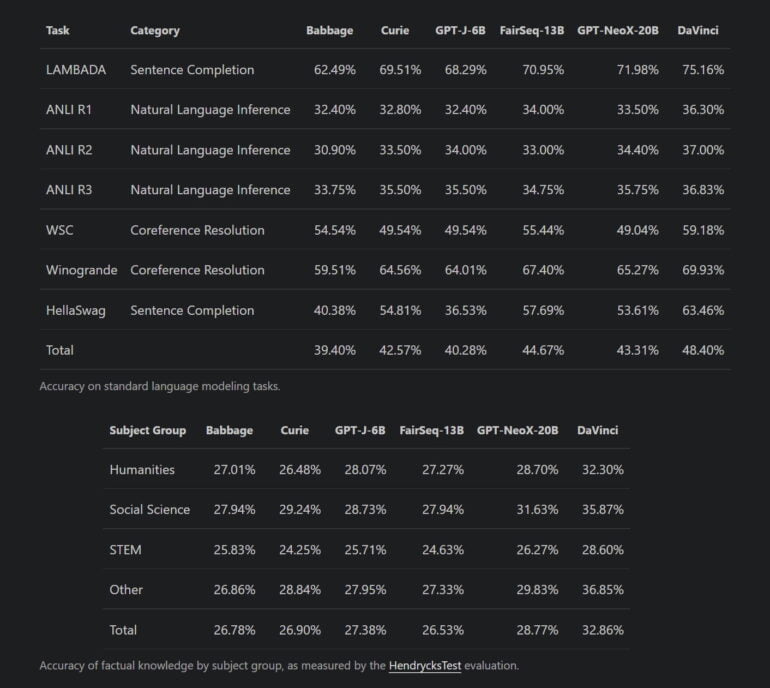

Review — GPT-NeoX-20B: An Open-Source Autoregressive Language Model, by Sik-Ho Tsang

EleutherAI NLP Models

Everything You Need to Know about OpenAI's ChatGPT

Translation middleman

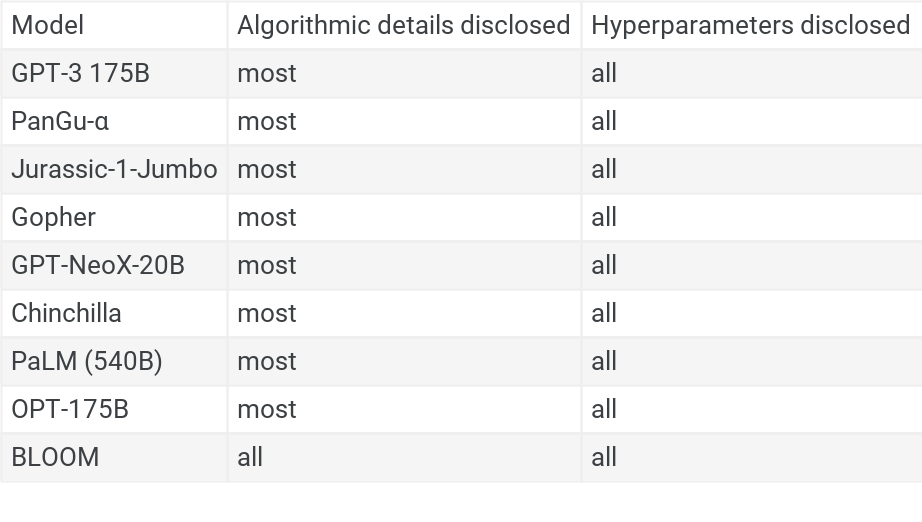

Publication decisions for large language models, and their impacts — EA Forum

GPT-3 alternative: EleutherAI releases open-source AI model

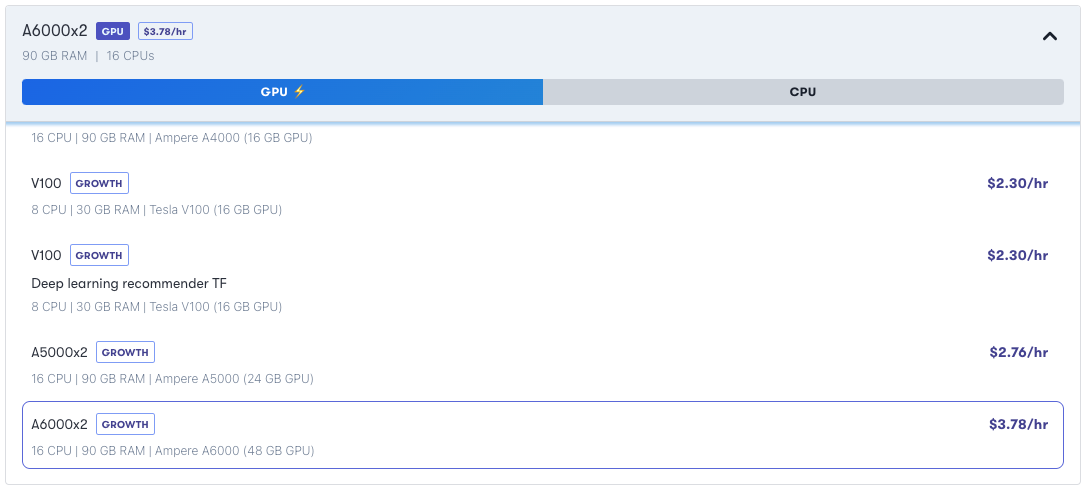

CoreWeave Unlocks the Power of EleutherAI's GPT-NeoX-20B

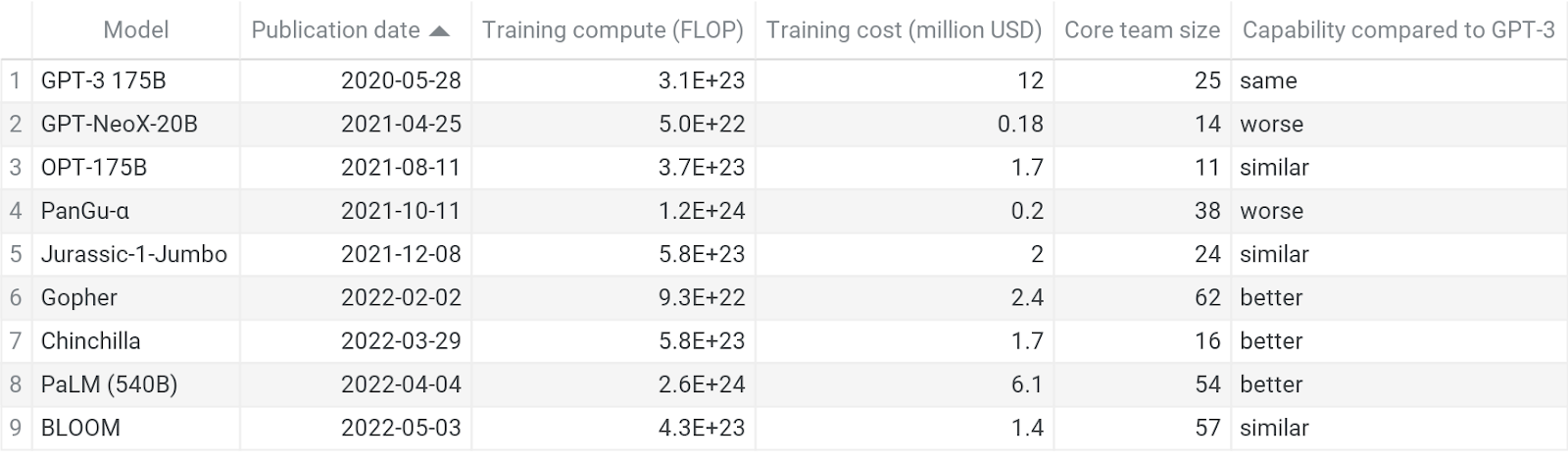

The replication and emulation of GPT-3 — EA Forum

Announcing GPT-NeoX-20B

CoreWeave Partners with EleutherAI & NovelAI to Make Open-Source AI More Accessible — CoreWeave

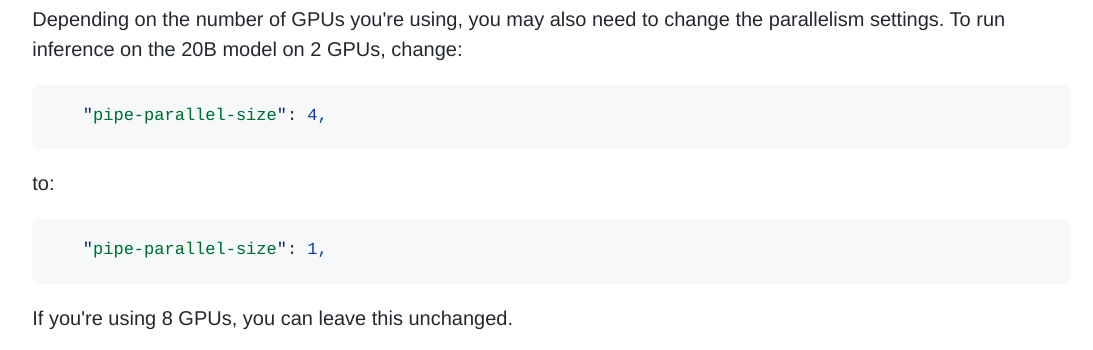

Deploying GPT-NeoX 20B In Production And a Focus On DeepSpeed

GPT-NeoX: A 20 Billion Parameter NLP Model on Gradient Multi-GPU

GitHub - microsoft/deepspeed-gpt-neox: An implementation of model parallel autoregressive transformers on GPUs, based on the DeepSpeed library.